User interfaces are often the most visceral aspect of software development. Most desk-bound professionals use software for 8-10+ hours per day and, as a consequence, have opinions and expectations about UI. We’ve all lost precious time and sanity to poorly designed apps. We’ve also been inspired and awe-struck by novel and engaging user interfaces. Therefore, virtually every time we have the chance to build or improve a UI, almost everybody in the room will have something to say about it.

Couple this intensity of focus with the belief, held by many software developers, that design is only for right-brained people, and you can understand why building a UI can be very stressful unless your team has dedicated UI design resources. The truth is that, regardless of how great the underlying code is, the UI is how most people outside your project will judge it.

Building the user interface for a software product in a team can be a profoundly frustrating or enjoyable experience, depending on your context and approach. At Fortigent, we’ve evolved a process that we think works great for our needs, and we wanted to share it here.

Agile Mindset

You’ll hear a lot about agile on this blog, and for good reason. We embrace it because we know from experience that, in our context, it works much better than waterfall-style big design up front (BDUF). There’s a lot of noise out there about agile, and it can be hard to understand its essence.

For me, software development is a creative process, more art than science. From a business perspective, agile is risk mitigation. From a creative perspective, agile is a feedback loop. Case in point:

Our team is always in the agile mindset: we crave feedback, embrace change and fail fast instead of trying to build the wrong thing perfectly the first time.

Clarity of Purpose

“Theirs not to reason why, theirs but to do and die.” - “The Charge of the Light Brigade” by Alfred, Lord Tennyson

If you’re a developer or designer, you’ve heard questions like this a thousand times from stakeholders: “How hard would it be to make the button open a pop-up window instead?” This is natural; the stakeholder has received or raised a concern, analyzed the problem and proposed a solution.

Building a software product based only on “how hard would it be…” questions is a recipe for failure. If you’re a developer and are ever asked this, resist the natural urge to go into problem-solving mode and simply ask “why?” Then ask it again.

Only when the team has a deep understanding of the whys should it begin to propose and test solutions. The first idea is almost never the best one, by the way, and that’s OK.

To achieve this, stop looking at the screen and talk about the real-world end-user or business problems at hand. You need an environment of trust where questions are encouraged and where time developers spend discussing issues and white-boarding solutions is not considered “wasted”.

If, as a team, you can’t achieve a deep understanding of the whys just by asking questions, use your feedback loop.

The how is the glue: it is arguably the most important part, gets the least praise, and takes the most time and experience to do well. For example, if you hastily implement a UI change to get feedback without properly unit testing or modularizing it, then when (not if) you are asked to change it, the change will likely take much longer than expected and/or introduce bugs along the way. On the other hand, if you over-engineer a solution for a feature that is eventually dropped from the product, you’ve wasted a lot of time for nothing (YAGNI).

Knowing how much engineering to apply typically correlates with how well you know your users and the product vision, not with how many technologies you are proficient in.

Our Process

PowerPoint Mockups

Mockups are created for all relevant UIs of a given feature, usually long before development work has been prioritized. These are typically done in PowerPoint and iterated on several times. Once the product owners reach some consensus, the mockups are posted in SharePoint and stuck to the wall as a sort of passive hallway usability test, to let the ideas percolate and to show off a little.

Define software interfaces/contracts

Developers reach rough consensus on an implementation path, then define the contracts between the UI layer and the business logic (in our case, ViewModel and Command objects) so that each side can be worked on and unit tested in isolation. These contracts are not set in stone, of course.
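As a rough illustration of such a contract, here is a minimal TypeScript sketch. The post's client-side stack is Knockout.js, so TypeScript is used here only because its interfaces make a contract explicit. All names (`Holding`, `HoldingsQuery`, `SaveNoteCommand`, `HoldingsViewModel`) are hypothetical, and the synchronous query is a simplification of what would normally be an asynchronous server call.

```typescript
// Hypothetical contract between the UI layer and the business logic.
// All names here are illustrative, not taken from an actual codebase.

interface Holding {
  name: string;
  marketValue: number; // dollars
}

// Query side: the view model depends on this interface, not on a concrete data source.
interface HoldingsQuery {
  getHoldings(accountId: string): Holding[];
}

// Command side: an action the UI can trigger, with its own validation rule.
interface SaveNoteCommand {
  canExecute(note: string): boolean; // e.g. disable the Save button when false
  execute(note: string): void;
}

// The view model only sees the two interfaces above.
class HoldingsViewModel {
  holdings: Holding[] = [];
  note = "";

  constructor(
    private readonly query: HoldingsQuery,
    private readonly saveNote: SaveNoteCommand
  ) {}

  load(accountId: string): void {
    this.holdings = this.query.getHoldings(accountId);
  }

  totalValue(): number {
    return this.holdings.reduce((sum, h) => sum + h.marketValue, 0);
  }

  // Forwards to the command only when the command says it can run.
  submitNote(): boolean {
    if (!this.saveNote.canExecute(this.note)) {
      return false;
    }
    this.saveNote.execute(this.note);
    return true;
  }
}
```

Because the view model depends only on interfaces, a unit test can pass in plain-object fakes while the real server-backed implementations are written in parallel.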

Fully functional UI with realistic states

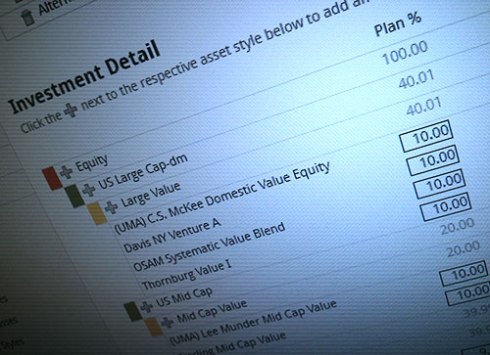

When building the UI layer, we use copious amounts of semi-realistic fake data. The goal here is to get feedback, so the more interaction we can build for real, the better. Most of what we do involves financial data analysis and/or forms (CRUD), so we make sure our fake data covers these possible states:

- Big Data: Can the table handle thousands of rows or does it need pagination/infinite scroll/etc.? Can the UI handle a very long name or very large dollar figure?

- Bad Data: Have we accommodated all input and security validation scenarios? These are often overlooked and can become quite complex. Any QA professional will tell you it’s the first thing they think about, so it should be the first thing you think about too.

- No Data: Have you designed for cases where there will be no available data at all?

- Devices: Is the app usable on relevant browsers and mobile devices? At multiple pixel densities, screen sizes and orientations?
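A minimal sketch of fake-data builders for the Big, Bad and No Data states above might look like the following. `Row`, `DataState`, `fakeRows` and `validateRow` are hypothetical names, and the specific values (5,000 rows, a 1,000-character name, the sample bad inputs) are arbitrary choices for illustration, not the team's actual fixtures.

```typescript
// Hypothetical fake-data builders for the Big, Bad and No Data states.
interface Row {
  name: string;
  amount: number; // dollars
}

type DataState = "typical" | "big" | "bad" | "none";

function fakeRows(state: DataState): Row[] {
  if (state === "none") {
    return []; // exercises the empty-state UI
  }
  if (state === "bad") {
    // Values a form or table must reject or render safely.
    return [
      { name: "", amount: 100 }, // missing required field
      { name: "<script>alert('x')</script>", amount: 5 }, // markup that must be escaped, never executed
      { name: "Negative Fund", amount: -250 }, // sign handling in display
      { name: "Broken Import", amount: NaN }, // non-numeric value from upstream
    ];
  }
  const count = state === "big" ? 5000 : 5;
  const rows: Row[] = [];
  for (let i = 0; i < count; i++) {
    rows.push({ name: "Client " + (i + 1), amount: (i + 1) * 1000 });
  }
  if (state === "big") {
    // One extreme row to stress column widths and number formatting.
    rows[0] = { name: new Array(201).join("Long "), amount: 9999999999.99 };
  }
  return rows;
}

// A simple validator that some of the "bad" rows should trip.
function validateRow(row: Row): string[] {
  const errors: string[] = [];
  if (row.name.trim() === "") errors.push("name is required");
  if (/[<>]/.test(row.name)) errors.push("name must not contain markup");
  if (isNaN(row.amount)) errors.push("amount must be a number");
  return errors;
}
```

Switching the UI between these states during a demo makes it easy to see whether the table needs paging, whether long values break the layout, and whether the empty state was designed at all.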

Real server-side implementation

While the UI is being built, we’re also building out the server-side implementation in parallel, taking all the usual things into consideration, like performance, adaptability and security. This is a whole other world of constraints and challenges. Check out Andriy Volkov’s post to learn more about some of the recent developments in this area.

Wire up the UI to real data

After some iterations on the UI, and once the real server-side implementation is available, we simply wire them up (usually it’s a one-line code change) and voilà.
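One way to keep that switch down to a single line is to have the UI depend on a service interface and pick the implementation in one place. This TypeScript sketch assumes callback-style data access in the spirit of a jQuery AJAX call; `ClientRecord`, `ClientService`, `GetJson`, `createClientService` and the `/api/clients` URL are all hypothetical.

```typescript
// Hypothetical service contract shared by the fake and the real implementation.
interface ClientRecord {
  id: number;
  name: string;
}

type ResultCallback<T> = (result: T) => void;

interface ClientService {
  listClients(done: ResultCallback<ClientRecord[]>): void;
}

// Fake implementation used while the server side is still being built.
class FakeClientService implements ClientService {
  listClients(done: ResultCallback<ClientRecord[]>): void {
    done([{ id: 1, name: "A Client With An Unusually Long Display Name" }]);
  }
}

// Hypothetical transport, e.g. a thin wrapper around jQuery's getJSON.
type GetJson = (url: string, done: ResultCallback<unknown>) => void;

// Real implementation that calls the server.
class HttpClientService implements ClientService {
  constructor(private readonly getJson: GetJson) {}

  listClients(done: ResultCallback<ClientRecord[]>): void {
    // Assumes the endpoint returns JSON shaped like ClientRecord[].
    this.getJson("/api/clients", (data) => done(data as ClientRecord[]));
  }
}

// Composition root: wiring up real data is this one line.
function createClientService(getJson: GetJson): ClientService {
  // Before the server side existed this read: return new FakeClientService();
  return new HttpClientService(getJson);
}
```

Since the view models only ever see `ClientService`, nothing else in the UI has to change when the real implementation is swapped in.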

It is now time for more demos, testing and feedback. After that, usually a few rounds of consistency checks and polish are necessary before we can release the app to our customers.

Separation of Concerns

Tools like ASP.NET MVC on the server side and Knockout.js on the client side enable us to cleanly separate our data, our logic and our user interface. We don’t stop there, however.

On the server side, we do things like avoid MVC pitfalls and apply various design and architectural patterns to further separate our concerns and follow the single responsibility principle (SRP). We use action filters for cross-cutting concerns like security, and we unit test like crazy.
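ASP.NET MVC action filters are C# attributes that run around controller actions. The TypeScript sketch below does not use that API; it only illustrates the underlying idea of a cross-cutting check (here, authorization) wrapping an action so that the action itself keeps a single responsibility. `requireRole`, `ControllerAction`, the `advisor` role and the result shape are hypothetical.

```typescript
// Illustrative only: not the ASP.NET MVC action filter API.
interface ActionRequest {
  userRoles: string[];
  reportName: string;
}

interface ActionResult {
  statusCode: number;
  body: string;
}

type ControllerAction = (req: ActionRequest) => ActionResult;

// "Filter": returns a new action that runs the security check first.
function requireRole(role: string, action: ControllerAction): ControllerAction {
  return (req) => {
    if (req.userRoles.indexOf(role) === -1) {
      return { statusCode: 403, body: "Forbidden" };
    }
    return action(req);
  };
}

// The action itself contains only business logic, no security code.
const exportReport: ControllerAction = (req) => ({
  statusCode: 200,
  body: "Exported " + req.reportName,
});

const securedExportReport = requireRole("advisor", exportReport);
```

In ASP.NET MVC itself, the equivalent check is commonly expressed with the built-in `[Authorize(Roles = "...")]` filter attribute on a controller or action.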

On the client side, we work to separate our structure (HTML) from our data (JSON) from our styling (CSS) from our logic (JavaScript). We avoid mixing DOM-specific code into our JavaScript application logic so that it can be more easily unit tested using QUnit. We also try to keep our code DRY and build reusable jQuery plugins or Knockout binding handlers at the first sign of an opportunity to share the love.
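A hypothetical sketch of that split: the logic functions below take plain data and return plain data, so a QUnit (or any other) test can call them without a browser page, while a thin `render` adapter is the only code that would touch the DOM. `PortfolioPosition`, `summarize`, `formatUsd` and `render` are illustrative names, and the adapter accepts any object with a `textContent` property rather than a real element, an assumption made to keep the example self-contained.

```typescript
// Hypothetical example: application logic kept free of DOM access.
interface PortfolioPosition {
  symbol: string;
  shares: number;
  price: number; // dollars per share
}

interface PortfolioSummary {
  totalValue: number;
  largestSymbol: string;
}

// Pure logic: data in, data out. Easy to unit test.
function summarize(positions: PortfolioPosition[]): PortfolioSummary {
  let totalValue = 0;
  let largestSymbol = "";
  let largestValue = -Infinity;
  for (let i = 0; i < positions.length; i++) {
    const value = positions[i].shares * positions[i].price;
    totalValue += value;
    if (value > largestValue) {
      largestValue = value;
      largestSymbol = positions[i].symbol;
    }
  }
  return { totalValue: totalValue, largestSymbol: largestSymbol };
}

// Pure formatting, e.g. 1234625 -> "$1,234,625.00".
function formatUsd(value: number): string {
  const sign = value < 0 ? "-" : "";
  const parts = Math.abs(value).toFixed(2).split(".");
  const whole = parts[0].replace(/\B(?=(\d{3})+(?!\d))/g, ",");
  return sign + "$" + whole + "." + parts[1];
}

// Thin adapter: the only code that touches the page.
function render(target: { textContent: string | null }, positions: PortfolioPosition[]): void {
  target.textContent = formatUsd(summarize(positions).totalValue);
}
```

In a browser, `render` could be handed a real element (for example one returned by `document.getElementById`), while the tests exercise `summarize` and `formatUsd` directly.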

In short, we separate our concerns a LOT because it’s really what makes software… soft.

Feature Toggles

We use continuous integration and release frequently, so how do we iterate on a feature over longer periods of time? Our answer has been feature toggles. Basically, we use configuration to hide work-in-progress features, which lets us demo them without worrying about feature branches. This can get tricky with major data persistence changes, but it’s manageable.
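A minimal sketch of configuration-driven toggles, assuming a simple map of feature names to booleans loaded per environment. `FeatureToggles`, `reportMenuItems` and the `newPerformanceReport` flag are hypothetical; a real setup would read the configuration from a file or server rather than hard-coding it.

```typescript
// Hypothetical toggle configuration: feature name -> enabled?
interface ToggleConfig {
  [feature: string]: boolean;
}

class FeatureToggles {
  constructor(private readonly config: ToggleConfig) {}

  // Features missing from the config default to off, so unfinished work stays hidden.
  isEnabled(feature: string): boolean {
    return this.config[feature] === true;
  }
}

// For example, one config per environment (values are illustrative).
const demoToggles = new FeatureToggles({ newPerformanceReport: true });
const productionToggles = new FeatureToggles({ newPerformanceReport: false });

// UI code asks the toggle instead of living on a long-running feature branch.
function reportMenuItems(toggles: FeatureToggles): string[] {
  const items = ["Holdings", "Transactions"];
  if (toggles.isEnabled("newPerformanceReport")) {
    items.push("Performance (beta)");
  }
  return items;
}
```

Defaulting unknown features to off means a forgotten configuration entry hides work in progress rather than exposing it. This sketch does not address the data persistence changes mentioned above, which need their own migration strategy.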

Always Learning

Where we are now has been the result of a learning process and will never be perfect. Here are a few ways I think we can improve.

Nothing is Intuitive, Only Familiar

We work at a rapid pace. We have a flat organization, many senior developers with strong opinions and no dedicated team of UI designers. Achieving 100% consistency in visual design, interaction design and information architecture across our larger applications has been a challenge. We encourage innovation, so if a developer finds or makes a cool new UI widget (say, an auto-complete drop-down menu with spell check and voice recognition) and uses it to create a great user experience, then great!

Eventually, however, there may be a temptation to implement that shiny new menu across the application for the sake of consistency. So it’s important that we as developers recognize this, especially when under pressure, avoid getting attached to our creations and keep the importance of consistency in mind.

Managing Expectations

“It’s not an iteration if you only do it once.” - Jeff Patton

The one downside of building the UI with fake data first is that some people think it’s done! In fact, it is far from “finished” (software, like many things, is rarely ever finished). Sometimes we get so excited about a new UI that we wind up showing it off to external folks before it has been through a single iteration. We need to do a better job of explaining the creative process and inviting more people to contribute to it.

Managing Feedback and Design

“If everything is important, then nothing is.” - Patrick Lencioni

We’re very skilled developers and super confident in our ability to build the thing right. We crave feedback to make sure we’re building the right thing.

However, just because we want this precious feedback doesn’t mean we’ll always use it. That goes back to the basics of design and product management. That’s a much bigger topic, but the point is that we must strive to distil the feedback into short-term and long-term plans. This sometimes means saying “no”, which can be very awkward for people who excel at bending over backwards for customers.

Measure It!

“If you cannot measure it, you cannot improve it.” - Lord Kelvin

A core part of HCI and UX is to empirically understand what works for users and what doesn’t.

We can build a UI that we love internally, but if we never collect data on users’ experiences, we are ultimately missing the mark. We could benefit greatly from spending more time with end users, performing usability tests and A/B testing to guide our decisions.
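As one hedged example of the A/B testing mentioned above, users can be assigned to variants deterministically by hashing a stable user ID, so the same person always sees the same UI without storing the assignment. This sketch uses the 32-bit FNV-1a hash mapped into the range [0, 1); `fnv1a32`, `assignVariant`, the variant names and the `splitToVariant` parameter are hypothetical, and a real experiment would also need event logging and statistical analysis.

```typescript
// Hypothetical deterministic A/B assignment.

// 32-bit FNV-1a over UTF-16 code units (byte-oriented FNV would hash UTF-8 bytes instead).
function fnv1a32(text: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    // Multiply by the FNV prime 16777619 (2^24 + 2^8 + 0x93) with shifts, modulo 2^32.
    hash = (hash + (hash << 1) + (hash << 4) + (hash << 7) + (hash << 8) + (hash << 24)) >>> 0;
  }
  return hash >>> 0;
}

type Variant = "control" | "variant";

// splitToVariant is the share of users (0 to 1) who should see the new UI.
function assignVariant(userId: string, splitToVariant: number): Variant {
  const fraction = fnv1a32(userId) / 4294967296; // maps the hash into [0, 1)
  return fraction < splitToVariant ? "variant" : "control";
}
```

Hashing instead of picking randomly on each visit keeps a user's experience stable across sessions, which matters when the goal is to compare how each UI actually performs.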

Nothing gets a developer more engaged with their work than watching a customer use their app.

Conclusion

Fortigent has evolved a process to build software products with rockstar ninja guru hipster awesomesauce user interfaces. The keys to this have been the feedback loop, clarity of purpose, separation of concerns and feature toggles. Thanks for reading!

– Tim Plourde

@timplourde